Praised be the Doomsayers

The human race will go extinct if AI has its way!

They point to the wonders of Generative AI (large language models, or LLMs) as proof of this.

We are lauding the power of something which doesn’t understand the meaning of a single word. It works on associations between words. You give it a prompt, and it looks in its large dataset for a piece of text that matches. If it stopped there, it would be relatively harmless, because someone with an Unconscious Mind wrote that piece of text, and it flows: the words are in grammatical order, and the clumping of objects is in accordance with the meanings of the words.

But

it doesn’t stop there – it attempts to cobble together other pieces of text,

and this is where the problems

begin. It is using associations – it has a word in one piece of text, it finds

the same word in another piece of text, so they must mean the same thing –

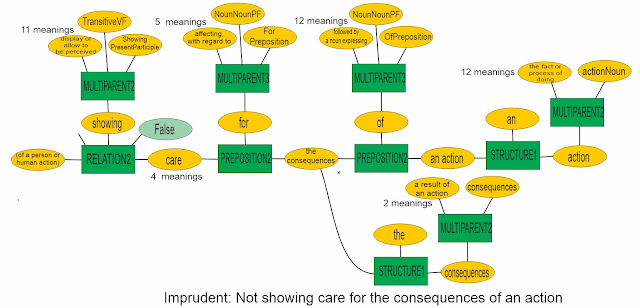

right? No, many words can be either a noun or a verb, a few words can represent

up to seven parts of speech. A word that only has a single part of speech can

easily have a dozen different meanings, some starkly different, some nuanced.

“Open”, as an adjective, has thirty-one meanings – an open door, an open

letter, an open secret, my door is always open, he is open to new ideas.

Generative AI knows nothing about any of this.

So who is proposing to put the fate of humanity in the hands of this unreliable toy, with its passion for hallucinating and cobbling together crazy quilts (stitching together unrelated things)? It is not hallucinating; it is doing what it has been told to do. It is just that the instructions bear no relevance to the complex world it is operating in.

*Cobbling a Crazy Quilt*

So why don’t we build something that does understand the meaning of words?

Our excuse seems to be that we tried that before and it didn’t work. There is a reason for that. Textual analysis is too difficult for our conscious mind to handle, because of its Four Pieces Limit: it can keep no more than four things in play at once. So we hand the problem off to our Unconscious Mind, which can handle more than four things in play at once. It doesn’t tell us how it does it, because we wouldn’t understand.

The challenge, then, is to build something which we won’t understand in toto, because there are too many moving parts. But such a thing is needed, to handle problems which also have too many moving parts for us to understand, and there are lots of those problems. Climate change is an obvious one; coming down a notch or two, many economic problems are beyond the comprehension of an economist with a Four Pieces Limit.

Can we do it? Of course we can: we just keep working on the facets until it stirs into life. Our version is called Active Structure. It represents somewhere between a hundred and a thousand times the complexity of an LLM. Google said it spent $2 billion on its LLM, so will we really need to spend hundreds of billions on a machine which understands meaning? People are already spending or betting hundreds of billions on LLMs: $20 billion for Snapchat, a trillion-dollar valuation for Nvidia. This is one area where ideas aren’t created by cash, but by banging your head against a brick wall until it breaks (the wall, that is). If you are going to bang your head, it helps to understand the problem before you do so. Many people skip this step.

Will it be safe, trustworthy, loyal? It will be up to us to give it a good, deep education (because it will be able to read and understand stuff), and to make sure it hypothesises about its actions (when there is time to do so).

Fortunately, it will have many uses before it takes over the world, one of which is stopping us from making horrendous mistakes in legislation (Robodebt) and in complex specifications (too many to mention), and even in some very simple ones.
