Bing’s Disclaimer

Bing is powered by AI, so … mistakes are possible.

Bing’s disclaimer set me thinking – why are we doing this?

Is it a way of using stupid AI to defang the threat of AI?

We can say – AI isn’t so bad, it is no threat, look how stupid it is. We are not going to give it the keys to the kingdom (the nuclear codes).

Our company is interested in developing AI that doesn’t make mistakes – AGI (Artificial General Intelligence). To do that, the AGI has to understand what words mean, and how words connect to each other.

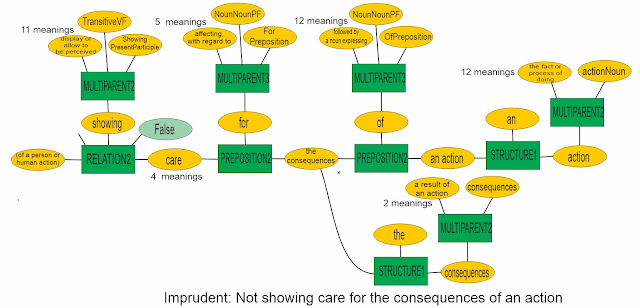

An example – imprudent is defined as “Not showing care for the consequences of an action”.

Why make it that – couldn’t we just say “Not prudent”?

English is a living language, and words are pressed into service to convey meanings they were not created to handle.

Words accrete new meanings, and the existing meanings drift, or become out of date. And a human has to know how words fit together into larger structures. An LLM can’t be bothered with any of this – more exactly, handling it would come at a high development cost, when more profit can be had by using marketing to push an inferior product. See “It thinks like an expert”.

What would be the purpose of AGI – why is “no mistakes” important?

In no particular order:

Boeing 737 MAX – many dead through venality, incompetence, and greed. Layers of human-based protection found wanting.

F-35 Project – a convincing demonstration of how not to run a complex project.

Voyager – let’s send a message to point its antenna away from Earth.

The surgeon amputates the wrong leg – ego overrides common sense.

Humans make lots of mistakes – they have a Four Pieces Limit, and they are unwilling to change their thinking after about the age of 25 (the stomach ulcers affair was a good example of this; more generally, humans turn conservative as they age, as a way of resisting having to discard shibboleths and rebuild their cognitive structure anew as the world changes).

Is it sensible to introduce, and spend billions on propagating, deeply compromised AI in the form of LLMs? No.

Will humans do it anyway? Yes.

Does AGI have a future? Let’s hope so, and let’s hope it is soon. It looks like predictions about Climate Change are already demonstrating the Four Pieces Limit – if something is too complicated to think about, we can’t think about it effectively, or not on a reasonable time scale.

We will know we are being serious about it when we put the same effort into teaching a machine the English language as we put into teaching our children (teaching our children is much easier, because they have the same apparatus as we do).
